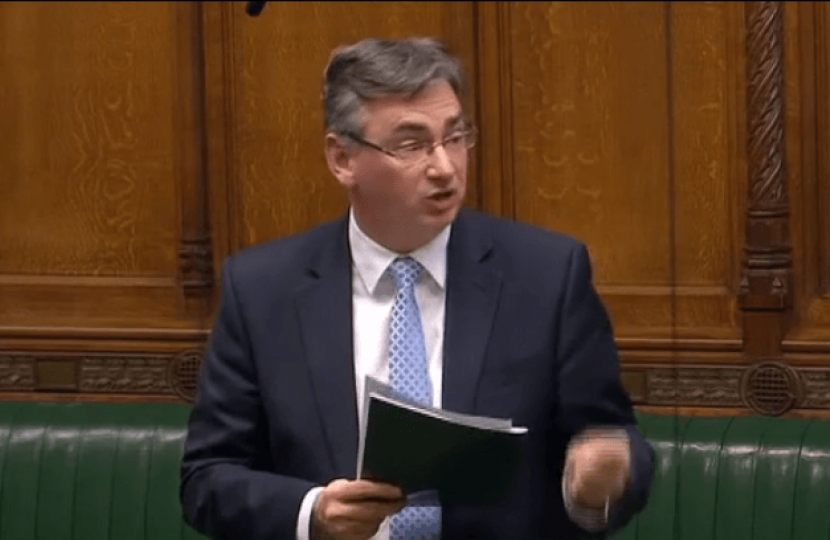

Julian Knight delivered a speech in the House of Commons on the Online Safety Bill on 19 April 2022:

Some colleagues have been in touch with me to ask my view on one overriding matter relating to this Bill: does it impinge on our civil liberties and our freedom of speech? I say to colleagues that it does neither, and I will explain how I have come to that conclusion.

In the mid-1990s, when social media and the internet were in their infancy, the forerunners of the likes of Google scored a major win in the United States. Effectively, they got the US Congress to agree to the greatest “get out of jail free” card in history: namely, to agree that social media platforms are not publishers and are not responsible for the content they carry. That has led to a huge flowering of debate, knowledge sharing and connections between people, the likes of which humanity has never seen before. We should never lose sight of that in our drive to fairly regulate this space. However, those platforms have also been used to cause great harm in our society, and because of their “get out of jail free” card, the platforms have not been accountable to society for the wrongs that are committed through them.

That is quite simplistic. I emphasise that as time has gone by, social media platforms have to some degree recognised that they have responsibilities, and that the content they carry is not without impact on society—the very society that they make their profits from, and that nurtured them into existence. Content moderation has sprung up, but it has been a slow process. It is only a few years ago that Google, a company whose turnover is higher than the entire economy of the Netherlands, was spending more on free staff lunches than on content moderation.

Content moderation is decided by algorithms, based on terms and conditions drawn up by the social media companies without any real public input. That is an inadequate state of affairs. Furthermore, where platforms have decided to act, there has been little accountability, and there can be unnecessary takedowns, as well as harmful content being carried. Is that democratic? Is it transparent? Is it right?

These masters of the online universe have a huge amount of power—more than any industrialist in our history—without facing any form of public scrutiny, legal framework or, in the case of unwarranted takedowns, appeal. I am pleased that the Government have listened in part to the recommendations published by the Digital, Culture, Media and Sport Committee, in particular on Parliament’s being given control through secondary legislation over legal but harmful content and its definition—an important safeguard for this legislation. However, the Committee and I still have queries about some of the Bill’s content. Specifically, we are concerned about the risks of cross-platform grooming and breadcrumbing—perpetrators using seemingly innocuous content to trap a child into a sequence of abuse. We also think that it is a mistake to focus on category 1 platforms, rather than extending the provisions to other platforms such as Telegram, which is a major carrier of disinformation. We need to recalibrate to a more risk-based approach, rather than just going by the numbers. These concerns are shared by charities such as the National Society for the Prevention of Cruelty to Children, as the hon. Member for Manchester Central (Lucy Powell) said.

On a systemic level, consideration should be given to allowing organisations such as the Internet Watch Foundation to identify where companies are failing to meet their duty of care, in order to prevent Ofcom from being influenced and captured by the heavy lobbying of the tech industry. There has been reference to the lawyers that the tech industry will deploy. If we look at any newspaper or LinkedIn, we see that right now, companies are recruiting, at speed, individuals who can potentially outgun regulation. It would therefore be sensible to bring in outside elements to provide scrutiny, and to review matters as we go forward.

On the culture of Ofcom, there needs to be greater flexibility. Simply reacting to a large number of complaints will not suffice. There needs to be direction and purpose, particularly with regard to the protection of children. We should allow for some forms of user advocacy at a systemic level, and potentially at an individual level, where there is extreme online harm.

On holding the tech companies to account, I welcome the sanctions regime and having named individuals at companies who are responsible. However, this Bill gives us an opportunity to bring about real culture change, as has happened in financial services over the past two decades. During Committee, the Government should actively consider the suggestion put forward by my Committee—namely, the introduction of compliance officers to drive safety by design in these companies.

Finally, I have concerns about the definition of “news publishers”. We do not want Ofcom to be effectively a regulator or a licensing body for the free press. However, I do not want in any way to do down this important and improved Bill.